<Tl;Dr>

We will use OpenRouter to get usage-based pricing for our LLMs and combine it with Open WebUI as the chat frontend.

</Tl;Dr>

Open WebUI should be a well-known tool for anyone interested in self-hosting LLMs.

For everyone else: it's basically an "insert your favorite LLM platform here" frontend that looks nearly identical to ChatGPT and similar chat apps.

Quick side note: this blog post is not about self-hosting an LLM. I don't have a GPU lying around.

Instead, we'll look at how you can cancel your LLM subscriptions and still use all of those models, or even more, probably at a lower price.

Why should I care?

If you're paying for an AI subscription, you know it can be quite expensive. ChatGPT Plus is €23 a month, Google AI Pro is €17 a month and Anthropic's Claude Pro is €17 as well.

Cheaper options exist, like t3.chat for $8 a month, which is good value if you use up all your credits.

But there's the catch: IF you use all your credits. And that's exactly what got me started on this project.

What I want

What annoyed me was the pricing model of most AI platforms out there.

Pay us a fixed monthly price and you'll get a fixed amount of credits each month, but every credit you don't use is lost.

I don't use AI often, and cheaper models like Gemini 2.5 Flash are good enough for me. As a result, I barely used a quarter of my available credits.

So I started looking for a provider with usage-based pricing: pay for what you use, plus a little extra so the provider can stay in business. That's where OpenRouter comes into play. It's a platform that aggregates access to over 300 AI models and passes through each model's actual API price. And you're only charged for what you use.
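To make "pay for what you use" concrete: the cost of a request is the number of tokens times the model's per-token price, which is usually quoted per 1 million tokens, often with separate input and output prices. Below is a minimal Python sketch; the function name and the prices in the example are made up for illustration, so check each model's page on OpenRouter for its real rates.

```python
# Minimal sketch of usage-based pricing: you pay per token, nothing else.
# The prices used below are hypothetical placeholders, not real OpenRouter rates.


def request_cost(prompt_tokens: int, completion_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Return the USD cost of one request, given prices per 1M tokens."""
    return (prompt_tokens * input_price_per_m
            + completion_tokens * output_price_per_m) / 1_000_000


# Hypothetical request: 1,200 tokens in, 400 tokens out,
# at $0.30 per 1M input tokens and $2.50 per 1M output tokens.
cost = request_cost(1_200, 400, 0.30, 2.50)
print(f"${cost:.6f}")
```

With these example numbers, the request costs $0.00136, so you would need hundreds of such requests before spending even a single dollar.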

I used my setup for roughly 30 days and spent $0.40 in credits. $0.30 of that went into the first day, testing everything and trying out some expensive models. After that, I mostly stuck to Gemini 2.5 Flash, which is cheap and "smart" enough for my needs.
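Extrapolating these numbers gives a rough sense of scale. The sketch below assumes the rate after the test day ($0.10 over the remaining ~29 days) stays constant for a whole year, which is an assumption, and compares it only with t3.chat because that price is also in USD.

```python
# Rough yearly comparison based on the numbers above. Assumes the usage rate
# after the $0.30 test day stays the same all year; real usage will vary.

DAYS_PER_YEAR = 365


def annualized(cost: float, days: int) -> float:
    """Scale the spend observed over `days` days to a full year."""
    return cost / days * DAYS_PER_YEAR


steady_state = annualized(0.10, 29)  # usage-based, after the first test day
t3_chat = 8 * 12                     # t3.chat at $8 per month

print(f"OpenRouter (extrapolated): ${steady_state:.2f} per year")
print(f"t3.chat:                   ${t3_chat:.2f} per year")
```

With these numbers, it prints about $1.26 versus $96.00 per year.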

The Project

We now have an API provider (OpenRouter) and a frontend for it (Open WebUI). So let's begin the setup. Trust me, it's easy!

First, create yourself an account on OpenRouter.

After registering, navigate to your credits settings and buy some credits. They are displayed in USD.

After topping up your account, you'll need to generate an API key. You can set a usage limit per key if you want, but it's not required.

After creating your key, take note of it; we'll need it later.

Now to the fun part: to set up Open WebUI, we're going to use Docker Compose. Save the following as `compose.yaml`:

```yaml
services:
  openwebui:
    image: ghcr.io/open-webui/open-webui:main
    ports:
      - "8080:8080"
    volumes:
      - ./data:/app/backend/data
    restart: unless-stopped
```

After starting the stack with `docker compose up -d`, you should be able to reach Open WebUI on port 8080 (e.g. http://localhost:8080) and set up your account.
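The container can take a little while to start. If you want to script the setup, a small helper like the hypothetical `wait_for_webui` below polls the URL until Open WebUI answers. It assumes the `8080:8080` port mapping from the compose file and uses only the Python standard library.

```python
import time
import urllib.error
import urllib.request

# Local server: bypass any HTTP proxy configured in the environment.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def wait_for_webui(url: str = "http://localhost:8080",
                   timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Return True once `url` answers with any HTTP response, False on timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with _OPENER.open(url, timeout=5):
                return True
        except urllib.error.HTTPError:
            # An HTTP error status still means the server is answering.
            return True
        except OSError:
            # URLError (e.g. connection refused) is a subclass of OSError:
            # the container is not listening yet, so try again.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Call `wait_for_webui()` right after `docker compose up -d`; it returns `False` if nothing answers within the timeout, which usually means the container failed to start (check `docker compose logs`).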

The only thing left is to connect Open WebUI with OpenRouter.

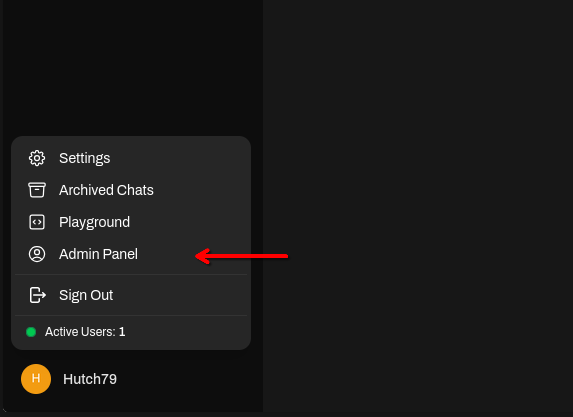

For this, we'll go into the admin settings. You'll find them in the bottom left by clicking on your name.

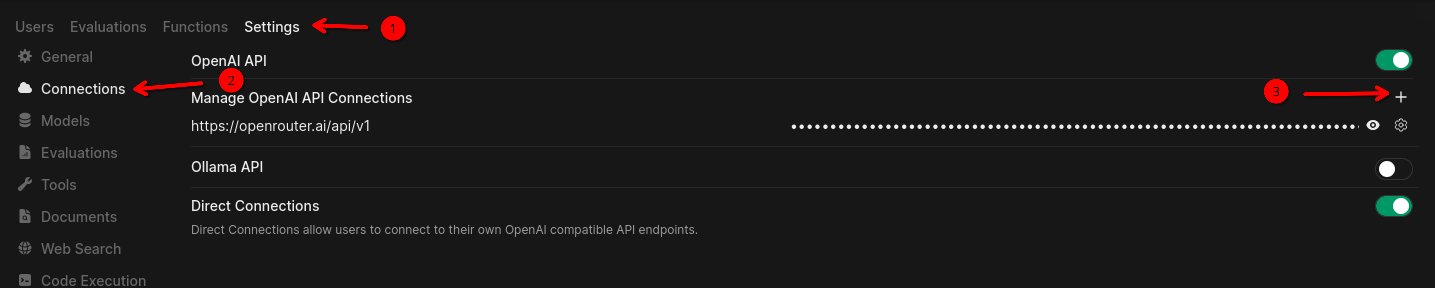

We then need to navigate to "Settings > Connections", where we can add a new OpenAI API connection.

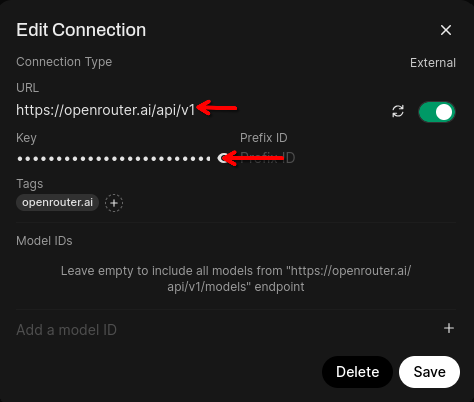

Here, enter your previously generated API key and the API URL, which is https://openrouter.ai/api/v1.
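Because OpenRouter exposes an OpenAI-compatible API under this base URL, you can also test your key outside Open WebUI. Here is a minimal sketch using only the standard library; the function names are hypothetical, and the request follows the OpenAI-style `/chat/completions` format.

```python
import json
import urllib.request

BASE_URL = "https://openrouter.ai/api/v1"  # same base URL as in Open WebUI


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for OpenRouter."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the key generated on OpenRouter
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, model: str, prompt: str) -> str:
    """Send one prompt and return the assistant's reply (this spends credits)."""
    req = build_chat_request(api_key, model, prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        reply = json.load(resp)
    # OpenAI-compatible response: the text lives in choices[0].message.content
    return reply["choices"][0]["message"]["content"]
```

For example, `ask(os.environ["OPENROUTER_API_KEY"], "google/gemini-2.5-flash", "Say hello in one word.")`, where `OPENROUTER_API_KEY` is an environment variable name chosen for this example and the model ID is an assumption; copy the exact ID from the model's page on OpenRouter. An HTTP 401 error usually means the key is wrong.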

The Tag section is optional and up to you.

After this, click Save and you should be able to chat with an AI model of your choice.

Advanced configuration for cost saving

If everything works as expected, we can configure Open WebUI to save some tokens.

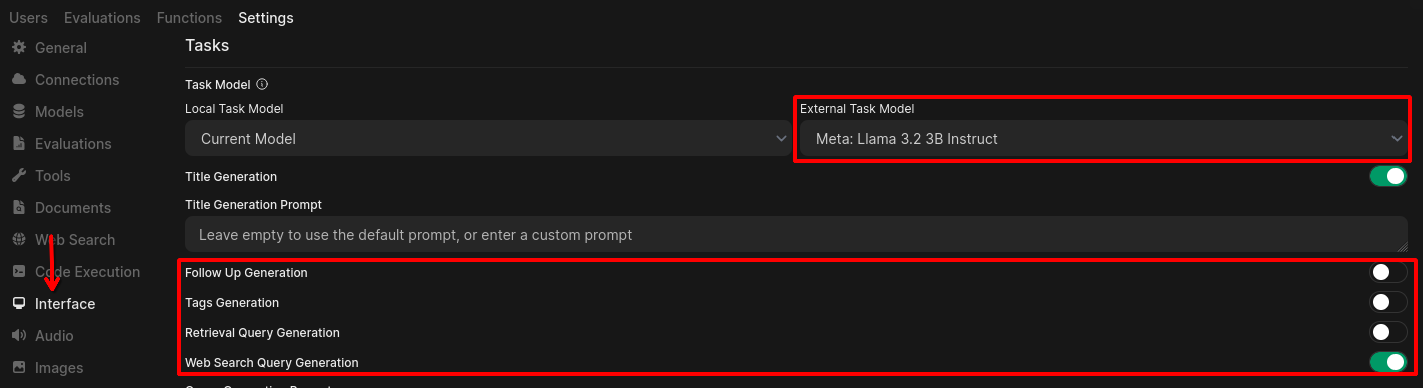

By default, chat titles, tags and message suggestions are generated by the currently selected model.

This can get expensive, especially if you use reasoning models. But even if you don't, a chat title does not need to be generated by a state-of-the-art, top-of-the-line model. A simple, small and cheap model is more than enough.

The single most important change is the "External task Model". This is the model that executes the tasks listed below it. By switching it to a cheap model like "Meta Llama 3.2 3B Instruct", you can easily save some credits and spend them on messages that actually matter. My selected model costs $0.10 per 1 million tokens, far less than Gemini or similar models.
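A quick back-of-the-envelope calculation shows why the task model matters. Only the $0.10 per 1 million tokens price comes from this post; the tokens per title, the number of titles per month and the $10.00 "large model" price are hypothetical, and input and output are billed at one flat rate for simplicity.

```python
# Back-of-the-envelope cost of generating chat titles.
# Hypothetical assumptions: token count per title request, titles per month,
# the large-model price, and one flat rate for input and output tokens.

TOKENS_PER_TITLE = 500   # hypothetical: instructions + chat excerpt + title
TITLES_PER_MONTH = 300   # hypothetical: about 10 new chats a day


def monthly_title_cost(price_per_m: float) -> float:
    """USD per month spent on title generation at a flat per-1M-token price."""
    return TOKENS_PER_TITLE * TITLES_PER_MONTH * price_per_m / 1_000_000


small = monthly_title_cost(0.10)   # the $0.10 per 1M tokens task model
large = monthly_title_cost(10.00)  # hypothetical pricier model

print(f"small task model: ${small:.4f}/month, large model: ${large:.4f}/month")
```

It prints $0.0150 versus $1.5000 per month: a 100x difference for the exact same chat titles.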

The other settings, like tag generation or follow-up generation, are up to you. I don't need them, so I disabled them. If you leave them enabled, they won't break the bank (as long as you selected a cheap task model), so try them out and decide for yourself.

Conclusion

Here we are now.

We now have our very own chat platform which, at least for light usage like mine, is way cheaper than any subscription. Even better, we can brag to our friends, although they probably won't understand a word of it ^^'

Create your own LLM chat, where you pay what you use

Have you ever wanted to use better AI models, but they're just too expensive? In this post, you'll learn how to set up a platform where you pay for what you use. Spoiler: it's cheaper than any subscription I know of ^^